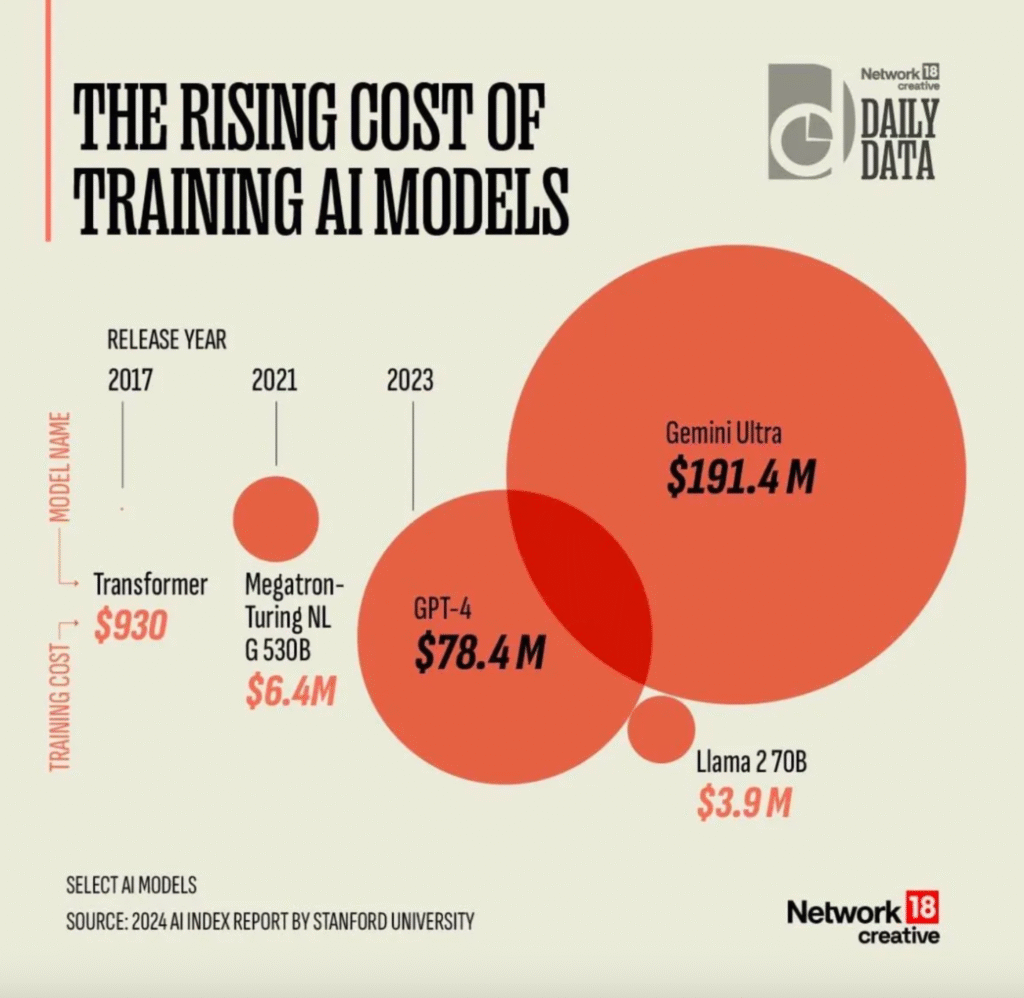

As AI continues to revolutionise industries and everyday life, the financial and computational investment required to create state-of-the-art models is rising steeply. A recent chart by Network18 Creative, built on data from Stanford University’s 2024 AI Index Report, shows just how fast the cost of training advanced AI models has climbed.

In this post, we look at the steep rise in AI training costs, what drives it, and what it may mean for AI engineering in the years to come.

The Evolution of AI Training Costs

Training AI models used to be considerably cheaper, but growing model complexity and the demand for higher performance have pushed costs up sharply. Here are a few prominent models and their training costs:

- Transformer (2017): The original Transformer architecture is the first major AI model on the chart and played a significant role in reshaping natural language processing. It cost about $930 to train in 2017, at the very start of the AI model boom.

- Megatron-Turing NLG 530B (2021): Fast forward to 2021, and costs jump substantially. Megatron-Turing NLG, with 530 billion parameters, cost $6.4 million to train. The sharp increase in parameter count marked a critical leap in both capability and investment.

- GPT-4 (2023): OpenAI’s GPT-4, hailed as one of the most advanced language models in existence, cost an astounding $78.4 million to train. That is more than twelve times the Megatron-Turing figure from just two years earlier. The model’s capacity to handle a diverse range of tasks with impressive accuracy shows the sophistication of this new generation of models.

- Gemini Ultra (2023): Perhaps the most striking figure on the chart is for Gemini Ultra, trained in 2023 at an eye-popping cost of $191.4 million. This reflects not only the increasing scale of AI models but also the computational infrastructure needed to support them.

- LLaMA 2 70B (2023): Meta’s LLaMA 2 70B, open-sourced in 2023, was comparatively cheap to train at $3.9 million. While still significant, this figure shows that not all state-of-the-art models carry the enormous price tags of their proprietary counterparts.
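To put the figures listed above in perspective, the short Python sketch below computes how many times more expensive one model was than another, and the average year-over-year growth factor that implies. The dollar amounts are the ones quoted in this post; the function names `growth_multiple` and `annual_growth_factor` are illustrative choices, not taken from the AI Index Report.

```python
# Training-cost figures (year, USD) as quoted in the list above.
COSTS = {
    "Transformer": (2017, 930),
    "Megatron-Turing NLG 530B": (2021, 6_400_000),
    "GPT-4": (2023, 78_400_000),
    "Gemini Ultra": (2023, 191_400_000),
    "LLaMA 2 70B": (2023, 3_900_000),
}


def growth_multiple(earlier: str, later: str) -> float:
    """How many times more the later model cost than the earlier one."""
    return COSTS[later][1] / COSTS[earlier][1]


def annual_growth_factor(earlier: str, later: str) -> float:
    """Average year-over-year cost multiplier between two models."""
    years = COSTS[later][0] - COSTS[earlier][0]
    if years <= 0:
        raise ValueError("the later model must come from a later year")
    # Geometric mean of the yearly growth: total multiple ** (1 / years).
    return growth_multiple(earlier, later) ** (1 / years)


if __name__ == "__main__":
    pairs = [
        ("Transformer", "Gemini Ultra"),
        ("Megatron-Turing NLG 530B", "GPT-4"),
    ]
    for earlier, later in pairs:
        print(
            f"{earlier} -> {later}: "
            f"{growth_multiple(earlier, later):,.2f}x overall, "
            f"{annual_growth_factor(earlier, later):.2f}x per year"
        )
```

On these numbers, GPT-4 cost 12.25 times as much as Megatron-Turing NLG, which works out to roughly 3.5x per year over two years, while Gemini Ultra cost more than 200,000 times as much as the original Transformer. Comparing just two data points is a crude measure, so treat these as rough indications rather than a fitted trend.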

Factors Driving the Rising Cost of AI Model Training

1. Scaling Model Size and Complexity

The main drivers of rising costs are the scale and complexity of the models themselves. Early models, such as the original Transformer, were relatively small in terms of parameter count. Contemporary models are far larger: Megatron-Turing NLG alone has 530 billion parameters, and although the sizes of GPT-4 and Gemini Ultra have not been publicly disclosed, their training costs point to far greater computing power and other resources.

2. Data Requirements

Training models like GPT-4 and Gemini Ultra requires massive amounts of data, which must be collected, cleaned, and preprocessed before it can be used. The infrastructure for storing and processing this data is expensive and computationally intensive, especially as models become multi-modal and handle text, images, and even video inputs.

3. Computing Power and Hardware

As models grow more complex, the demands on hardware grow rapidly as well. Specialised hardware such as GPUs (Graphics Processing Units) and TPUs (Tensor Processing Units) is expensive and in high demand. Data centres fitted with thousands of GPUs have become necessary to train these behemoth models, and that requirement weighs heavily on the overall cost.

4. Energy Consumption

Training AI models is power-hungry. Running data centres continuously, sometimes for weeks or months per model, drives up energy consumption and with it the overall cost.
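The hardware and energy components described above can be approximated with simple arithmetic: accelerator count times training time times a price per accelerator-hour, plus electricity used times its price. The sketch below is a back-of-envelope model only. The `TrainingRun` class, its field names, and every number in the example are hypothetical placeholders chosen for round arithmetic; none of them describes a real model or comes from the AI Index Report.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingRun:
    """Back-of-envelope inputs for one training run (all values assumed)."""

    num_accelerators: int              # GPUs or TPUs used in parallel
    days: float                        # wall-clock training time
    price_per_accelerator_hour: float  # USD, assumed to exclude electricity
    power_per_accelerator_kw: float    # average draw per accelerator, in kW
    electricity_price_per_kwh: float   # USD per kWh

    @property
    def accelerator_hours(self) -> float:
        return self.num_accelerators * self.days * 24

    def hardware_cost(self) -> float:
        return self.accelerator_hours * self.price_per_accelerator_hour

    def energy_kwh(self) -> float:
        return self.accelerator_hours * self.power_per_accelerator_kw

    def energy_cost(self) -> float:
        return self.energy_kwh() * self.electricity_price_per_kwh

    def total_cost(self) -> float:
        return self.hardware_cost() + self.energy_cost()


if __name__ == "__main__":
    # Placeholder numbers for illustration only, not a real training run.
    run = TrainingRun(
        num_accelerators=1_000,
        days=30,
        price_per_accelerator_hour=2.0,
        power_per_accelerator_kw=0.5,
        electricity_price_per_kwh=0.10,
    )
    print(f"Accelerator-hours: {run.accelerator_hours:,.0f}")
    print(f"Hardware cost:     ${run.hardware_cost():,.0f}")
    print(f"Energy used:       {run.energy_kwh():,.0f} kWh")
    print(f"Energy cost:       ${run.energy_cost():,.0f}")
```

Because every term is multiplied by both the accelerator count and the training time, doubling either one doubles the hardware and energy bill. The model deliberately leaves out cooling overhead, networking, storage, failed runs, and staff, so real costs would be higher.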

5. Research and Development

State-of-the-art models require intensive research and development, and it usually takes months, sometimes years, before a model is fully trained and launched. The people behind that work, including AI researchers, engineers, and data scientists, add substantially to the overall cost.

Implications for the Future of AI

1. Barriers to Entry

As the cost of training sophisticated models soars, it is becoming harder for small companies and startups to enter the field. Only well-funded organizations, typically big technology companies and prestigious research institutions, can afford to build state-of-the-art models. If this trend continues, power in AI may end up concentrated among a few players.

2. Open-Source AI: A Counterbalance

However, not all is lost for smaller players. Open-source models such as Meta’s LLaMA 2, which cost far less to train, offer a more approachable option. They let companies tap into advanced technology without the daunting cost of training a model from scratch.

3. Environmental Concerns

The energy consumed in training AI models raises serious environmental concerns. As models grow larger, so does their carbon footprint. This has, in turn, increased the demand for greener AI, pushing companies to research ways of making training more energy-efficient.

4. Advancements in AI Efficiency

Looking ahead, the AI community is likely to focus on models that are both more powerful and far more efficient. Techniques such as model compression, optimized neural architectures, and greener data centres all aim to reduce the cost of training AI models and make it accessible to a wider range of organizations.

The cost of training AI models is rising dramatically, reflecting both the tremendous successes and the challenges of artificial intelligence. Advanced models such as GPT-4 and Gemini Ultra demonstrate unparalleled capabilities while also showing the scale of financial and computational resources required to push the boundary of what AI can achieve.

As the field continues to evolve, the AI community must deftly strike a balance between the development of increasingly powerful models and the assurance that innovations remain available, sustainable, and responsible.